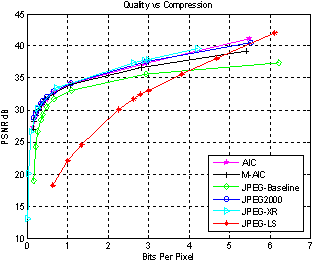

To get a perspective on where that lies, This paper has a number of graphs showing the rapid decline in quality as bits per pixel approaches zero.

So that's where things stood when I last played with it. I might be ready to have another go at it.

Before I dive into it I felt I should write about some of the things that I learned from my previous experience. Working with the evolver gave me plenty of things to ponder, and I think much of what I have learned came slowly to me during the intervening years as I thought about what it realy means.

Thinking about lossiness and expressiveness

One of the things my experience helped me with was formalising what is essentialy the obvious idea behind the process of evolving pictures. The ideal evolvable representation is a dataform which can express the widest range of images while maintaining a similarity between codes with minor differences. The second aspect of that principle is easy to accommodate, a bitmap does almost exactly this. A bitmap does a very poor job satisfying the first principle though because the range and difference required has to be considered in the space of everything that humans can perceive.Here's an example to show the problem.

| |

| Spot the Difference. A and B are one pixel different as are C and D |

The ideal image representation would be something where every codeable image formed evenly spaced points in the full field of humanly perceivable things. That is ultimately the best that any lossy data compression can achieve. Finding such a representation is difficult, if not impossible. Even identifying what is perceivable is a difficult question. At present, it is even a difficult task to evaluate just how perceptibly different two images are. Stuctural Similarity (SSIM) is used over Peak Signal to Noise Ratio (PSNR) in many tests. SSIM is still an extremely crude approximation of what happens during the interaction of the senses and the mind. To make matters worse, given that our perceptions are also colored by our knowledge, learning more about perception can alter how we perceive things.

Consider this hypothetical idea, If it were possible to calculate an evenly populated field all perceivable images you could compress any image down to any size with the best possible results. If you could populate the field with 4 billion evenly spaced points the resulting images could be represented in 32 bits. Such a compression rate would undoubtedly be extremely lossy, but from a pool of 4 billion distinct images it might get surprisingly close. This idea is the essence of lossy compression. Any lossy algorithm aims to produce data that satisfies that criteria.

If you mapped all bit combinations of data of a existing formats you would find points in the field of human perception with uneven spacing and clusters around certain zones. This is because nobody has yet invented the perfect Image compression algorithm yet. There is a good reason for that. We have no idea of what the space of human perception is. It's probably some many dimensional blob bulging in odd places. Even if we knew this, I'm not sure if there would be an accurate way of collecting data on a format short of trying every possible bit pattern. Hopefully, people way smarter than I are working on these issues.

Even though the ideal is vastly beyond our current reach, it at least can serve as a guide towards what is the right direction. Changes in data should produce perceivable differences, but to evolve, small changes should also produce similar images.

In the context of rendering images out of polygons I used irregular hexagons with a bit to encode whether or not to round the edges and a few bits to indicate a blurred edge. They were easy decisions because looking at other polygon evolvers, triangles seemed to be insufficiently expressive and images frequently have gradients which are difficult to represent with hard edged objects.

Recently I looked at doing a form of the Image renderer using a GPU shader. Instead of rendering a polygon and Gaussian blurring it, my plan was to use distance fields. When I used hexagons, the evolver managed to do some impressive tricks utilizing self intersecting shapes. It turns out computing a distance field for a self intersecting hexagon is not as easy as it sounds. While struggling with the problem, I had a small epiphany. Two triangles that can combine in multiple ways might be even more expressive. Here's where I ended up as a base gene.

red,green,blue,alpha : uint6;

a1,b1,c1 : {uint8,uint8} //triangle 1 clockwise filled anticlockwise outline.

a2.b2.c2 : {uint8,uint8} //triangle 2 clockwise filled anticlockwise outline.

falloff : uint5;

combineOp : uint3; // (or, smooth_or, subtract, union... possibly more )

Total: 16 bytes;

You can see a version trying various falloff levels on shadertoy

Thinking about Collaborative Image Compression.

When I tried evolving polygon, it was fairly evident that it would not be competitive with compression methods that aim for PSNR values over 30. The reason was obvious. at that level textures and patterns start becoming apparent which frequency based algorithms can utilize. Evolved polygons are blissfully unaware of such matters. This lead me to wonder about mixing methods.Many a naive coder has had the brilliant idea to try and improve the compression rate of lossless compression by compressing a lossy image and a lossless error map. After trying a JPEG+PNG combination they find the combination of the two images is usually more than a PNG on its own. I have seen a number of people do this, and I did it myself when I was much younger (although I did not use PNG due to it not being invented yet)

That this one way to do it does not work does not invalidate the entire method. In fact PNG itself does a simple form of this internally. The main distinction between PNG filters and storing a lossy image as a base is that the filter guesses the next pixel based upon what has already been encoded. It frequently guesses wrong, but by guessing it doesn't have to store any additional data to make the guess in the first place. Sometimes this can make things worse if the guesses are consistently wrong. The corrections might compress less well than the original data would have. Overall it tends to be a gain.

To take the step of encoding data to provide a base image is a more difficult task. For every additional byte of data you add to the first stage, you must improve the final stage by more than a byte. For lossy compression it becomes more unclear, for every byte spent, you must gain more than a byte's 'worth'. Since we are aiming for quality, the question is more to do with whether or not spending the byte early or spending it later results in the best image.

You may now see why this idea became of interest to me again after experimenting with the evolved images. The broad strokes approach of the polygon renderer exhibits the sort of profile we would look for in a first stage. It exhibits the most rapid gains towards the target image at the lowest bit-per-pixel rates.

The area that I would really like to explore is the idea of eliminating the concept of a first pass followed by a correction pass, but to see if a dynamically collaborative process could be obtained. Have a modular compression system where a compression algorithm module receives a working-image and an allocation of bytes and it tried to spend its bytes on encoding a modification to the working-image to better match the goal image.

The polygon evolver approach roughly resembles this mechanism already. Each polygon is encoded into a number of bytes and is rendered on top of the existing image. Polygons exist as 16 byte packets. Adding a polygon (after evolution) results in a net gain in image quality.

Extending this principle to multiple encoding methods has advantages and disadvantages. The advantages are obvious, using multiple techniques permits specialization . Specialization means the best performing technique can be chosen for image components. The main disadvantage is that specialization requires extra data to encode what method is chosen and where in the image that data applies.

In normal image compression some of the required information is implied by the format or context. DCT compressed images like JPEG store blocks as (usually) 8x8 cells. The same image could be encoded in a multi-algorithm image if it had a DCT module that handled a cell per data packet. The resulting image would look the same but have a larger data size. Every packet would have to encode data to say "This is a DCT cell at position x,y". JPEG gets that data for free. It's DCT because that's what JPEG does, and the position is "right after the last cell we decoded".

You could potentially squeeze that extra data into a small space, a data packet beginning single zero bit could indicate a the same type as the previous. Similarly if a DCT packet began with a zero it could indicate that it comes right after the previous cell in the picture. That would mean our hypothetical all-DCT image might only gain an additional 2 bits per cell. You wouldn't do that of course. Enabling multiple encoding techniques then using a single one eliminates all of the advantages of the system.

Where this idea would work best is for mixing a few techniques together. You may only end up with 5 DCT blocks in an image strategically placed in places where the polygonal method fails the worst. Or perhaps a glyph encoder is a better solution where it encodes on the assumption that it is encoding a bi-level or alpha-mask image where set pixels are usually by other set pixels and clear pixels are usually by other clear pixels.

Generating images using all these methods together is an interesting challenge. but I think it might be doable.

Before I dive into that part, I should first make a WebGL polygon shader evolver using my triangle pairs approach to hee how well it works.